Testing Coreference Resolution Systems without Labeled Test Sets

Image credit: Unsplash

Image credit: UnsplashAbstract

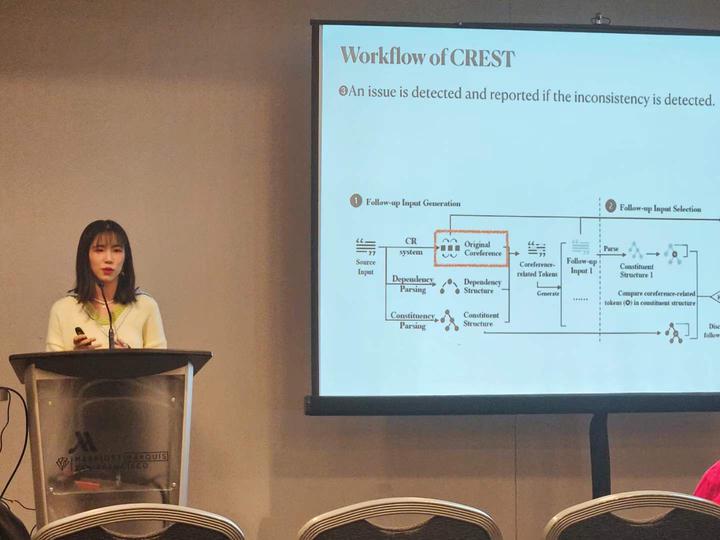

Coreference resolution (CR) is a task to resolve different expressions (e.g., named entities, pronouns) that refer to the same real-world en- tity/event. It is a core natural language processing (NLP) component that underlies and empowers major downstream NLP applications such as machine translation, chatbots, and question-answering. De- spite its broad impact, the problem of testing CR systems has rarely been studied. A major difficulty is the shortage of a labeled dataset for testing. While it is possible to feed arbitrary sentences as test inputs to a CR system, a test oracle that captures their expected test outputs (coreference relations) is hard to define automatically. To address the challenge, we propose Crest, an automated testing methodology for CR systems. Crest uses constituency and depen- dency relations to construct pairs of test inputs subject to the same coreference. These relations can be leveraged to define the meta- morphic relation for metamorphic testing. We compare Crest with five state-of-the-art test generation baselines on two popular CR systems, and apply them to generate tests from 1,000 sentences randomly sampled from CoNLL-2012, a popular dataset for corefer- ence resolution. Experimental results show that Crest outperforms baselines significantly. The issues reported by Crest are all true positives (i.e., 100% precision), compared with 63% to 75% achieved by the baselines.